- Self-reflection: Take time to reflect on your thoughts, feelings, and behaviors. Identify areas you would like to change and set specific goals to work on them.

- Self-awareness: Increase your awareness of how your actions and emotions affect others.

- Empathy: Practice putting yourself in other people's shoes to understand their perspectives.

- Communication skills: Work on improving your communication skills, both verbal and nonverbal.

- Emotional intelligence: Develop your emotional intelligence by learning how to identify, understand, and manage your own emotions, as well as the emotions of others.

- Social skills: Practice social skills such as active listening, making small talk, and building rapport with others.

- Self-discipline: Develop self-discipline by setting goals and working towards them consistently.

- Flexibility: Learn to be open-minded and adaptable to new situations.

- Resilience: Build your resilience to handle stress and bounce back from setbacks.

- Mindfulness: Practice mindfulness to become more present in the moment and reduce stress.

There are many ways to earn money online, and the best method for you will depend on your skills and interests. Some popular options include:

- Starting a blog or website and using affiliate marketing to promote other people's products.

- Selling products or services on platforms like Etsy or Amazon.

- Creating and selling online courses or e-books.

- Starting a YouTube channel and monetizing your content through ads or sponsorships.

- Trading cryptocurrencies or participating in online surveys.

- Freelancing in a skill or talent you have, such as writing, programming, graphic design, or consulting.

With the rapid growth of AI, companies are eager to hire skilled data scientists to grow their business. Apart from earning a Data Science certification, it is always good to have a couple of data science projects on your resume. Theoretical knowledge alone is never enough.

Topics covered in this article:

- Steps in the life cycle of a data science project

- Some websites where you can get projects and data sets

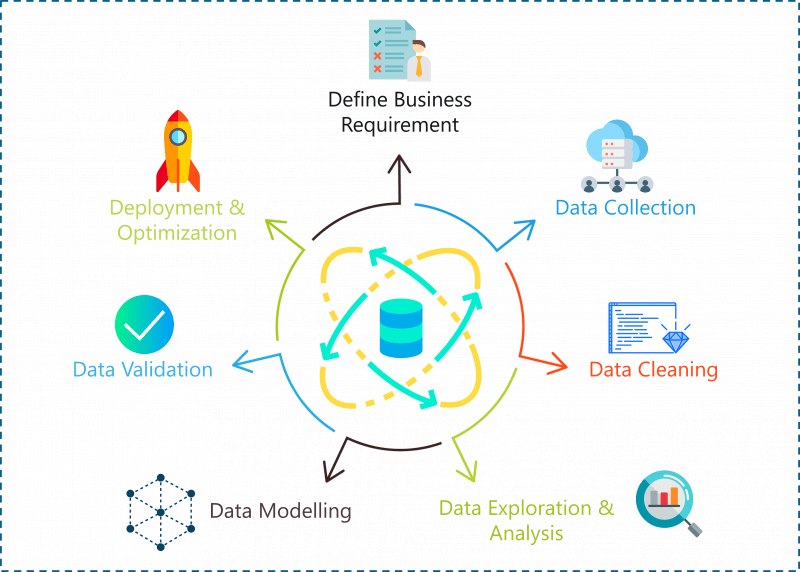

Data science project life cycle

Given the right data, data science can be used to solve problems ranging from fraud detection and smart farming to predicting climate change and heart disease. That said, data alone isn’t enough to solve a problem; you need an approach or a method that will give you the most accurate results. This brings us to the question:

How Do You Solve Data Science Problems?

A problem statement can be solved using the following steps:

- Define the problem statement/business requirement

- Data Collection

- Data Cleaning

- Data Preprocessing (feature engineering)

- Exploratory Data Analysis (EDA)

- Data Modelling

- Hyperparameter tuning

Let's look at each step in detail:

- Step 1: Defining the problem statement

- Before you even begin a data science project, you must define the problem you’re trying to solve. At this stage, you should be clear about the objectives of your project.

- Step 2: Data collection

- As the name suggests, at this stage you must acquire all the data needed to solve the problem. Collecting data is not easy, because most of the time you won’t find the data sitting in a database, waiting for you. Instead, you’ll have to go out, do some research, and collect the data or scrape it from the internet.

- Step 3: Data cleaning

- This is the main task, and a data scientist spends a good amount of time on it, because the final accuracy of your model depends on how clean your data is. Data cleaning is the process of handling missing values and removing redundant, duplicate, and unnecessary data. This stage is considered one of the most time-consuming stages in data science. However, in order to prevent wrong predictions, it is important to get rid of any inconsistencies in the data.

- Step 4: Data preprocessing

- This step involves various data preprocessing techniques such as feature scaling, normalization, and standardization.

- Step 5: Exploratory data analysis

- Once you’re done cleaning the data, it is time to bring out your inner Sherlock Holmes. At this stage in the data science life cycle, you detect patterns and trends in the data. This is where you retrieve useful insights and study the behavior of the data. By the end of this stage, you should start to form hypotheses about your data and the problem you are tackling. You will definitely enjoy this task.

- Step 6: Data modelling

- This stage is all about building a machine learning or deep learning model. It always begins with a process called data splitting (also called data splicing), where you split your entire data set into two portions: one for training the model (the training data set) and the other for testing the performance of the model (the testing data set).

- Step 7: Hyperparameter tuning (model optimization)

- This is the final stage in the data science project life cycle. In this stage you apply hyperparameter tuning techniques to tune your model and improve its accuracy. In this article I use two effective techniques for this: grid search (GridSearchCV) and randomized search (RandomizedSearchCV). In a deep learning model such as an ANN, the weights of the neural links are learnt during training, so what I tune there are the settings fixed before training (to know more about neural networks, refer to my article on ANN deep learning).
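The cleaning, exploration, splitting, and preprocessing steps above can be sketched in a few lines. This is only a minimal illustration, assuming pandas and scikit-learn are installed; the toy table and its column names (`age`, `income`, `bought`) are hypothetical and not taken from any real project. Note one deliberate choice: the scaler is fitted after the split, on the training rows only, so that nothing about the test rows leaks into training.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: one duplicated row and one missing value.
raw = pd.DataFrame({
    "age":    [25, 32, 32, 47, np.nan, 51, 38, 29],
    "income": [30_000, 52_000, 52_000, 81_000, 45_000, 90_000, 60_000, 39_000],
    "bought": [0, 1, 1, 1, 0, 1, 1, 0],
})

# Data cleaning: drop exact duplicates, then fill the missing age with the median.
clean = raw.drop_duplicates().reset_index(drop=True)
clean["age"] = clean["age"].fillna(clean["age"].median())

# Exploratory data analysis: a first look at the distributions.
print(clean.describe())

# Data splitting: hold out part of the rows for testing.
X = clean[["age", "income"]]
y = clean["bought"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Preprocessing (standardization): fit on the training rows only,
# then apply the same transformation to the test rows.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

print("train rows:", len(X_train), "test rows:", len(X_test))
```

On a real project each of these steps is far more involved, but the order of operations stays roughly the same.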

Most machine learning and data science aspirants have taken part in a hackathon at some point to test their skills. Some of the problem statements we need to solve are regression problems and some are classification problems. Let’s suppose we are in one, and we have done all the hard work of pre-processing the data, generated new features, and applied them to our data to get a base model.

So now we are ready with our base model and want to test it on test data or outside data, and we feel that the accuracy we got is only okay. In that case we can try to increase the accuracy of the model with a method called hyperparameter tuning.

So what are parameters and hyperparameters?

Model parameters are the values that a classifier or other ML model learns from the training data during training. They differ for each experiment and depend on the type of data and the task at hand.

Model hyperparameters are common to similar models; they cannot be learnt during training and must be set beforehand.
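To make the distinction concrete, here is a small sketch, assuming scikit-learn is installed. The choice of `LogisticRegression` and the iris data set is mine, purely for illustration: `C` and `max_iter` are hyperparameters we pick before training, while `coef_` holds the parameters the model learns in `fit()`.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Hyperparameters: chosen by us before training starts.
model = LogisticRegression(C=1.0, max_iter=500)

# Parameters: learnt from the data during fit().
model.fit(X, y)

print("hyperparameter C:", model.get_params()["C"])
print("learnt coefficient matrix shape:", model.coef_.shape)
```

Changing `C` and refitting gives a different set of learnt coefficients, which is exactly why tuning hyperparameters can change the accuracy of the model.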

Now let's look at the hyperparameter tuning techniques used in machine learning and deep learning:

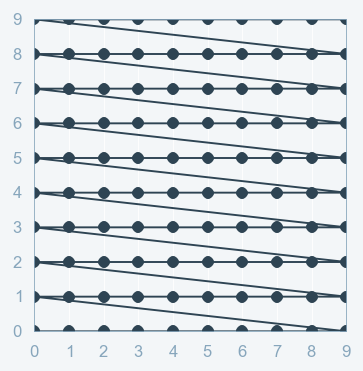

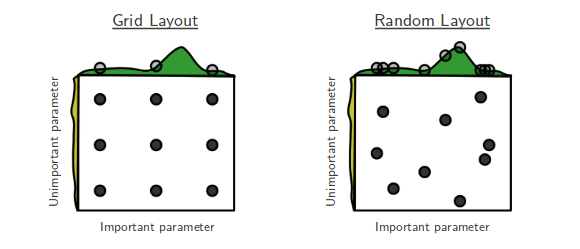

- Grid Search: As the name indicates, grid search tries every combination of the hyperparameter values you list in a grid and evaluates the model for each one, usually with cross-validation. In other words, the search covers the entire grid of candidate values and keeps the combination with the best score.

- This is very important, as the accuracy of the whole model depends heavily on hyperparameter optimization.

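```
# Example of Grid Search (a template: names such as load_dataset, dvalue
# and hparams1..hparamsn are placeholders to replace with your own)
from sklearn.model_selection import GridSearchCV
# KerasClassifier lived in keras.wrappers.scikit_learn in older Keras releases;
# newer setups usually import it from the scikeras package instead.

# Load the dataset
x, y = load_dataset()

# Create model for KerasClassifier
def create_model(hparams1=dvalue, hparams2=dvalue, ... hparamsn=dvalue):
    ...

model = KerasClassifier(build_fn=create_model)

# Define the range of candidate values for each hyperparameter
hparams1 = [2, 4, ...]
hparams2 = ['elu', 'relu', ...]
...
hparamsn = [1, 2, 3, 4, ...]

# Prepare the grid
param_grid = dict(hparams1=hparams1,
                  hparams2=hparams2,
                  ...
                  hparamsn=hparamsn)

# GridSearch in action
grid = GridSearchCV(estimator=model,
                    param_grid=param_grid,
                    n_jobs=...,   # number of parallel jobs
                    cv=...,       # number of cross-validation folds
                    verbose=...)  # how much progress output to print
grid_result = grid.fit(x, y)

# Show the results
print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))
means = grid_result.cv_results_['mean_test_score']
stds = grid_result.cv_results_['std_test_score']
params = grid_result.cv_results_['params']
for mean, stdev, param in zip(means, stds, params):
    print("%f (%f) with: %r" % (mean, stdev, param))
```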
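The template above leaves its model and ranges as placeholders. For something you can run right away, here is a minimal sketch, assuming scikit-learn is installed. It swaps the Keras model for scikit-learn's `SVC` and uses the built-in iris data set; both are my substitutions for illustration, not the setup from the template.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Candidate values for each hyperparameter: 3 x 2 = 6 combinations.
param_grid = {
    "C": [0.1, 1, 10],
    "kernel": ["linear", "rbf"],
}

# Every combination is evaluated with 5-fold cross-validation.
grid = GridSearchCV(estimator=SVC(), param_grid=param_grid, cv=5)
grid_result = grid.fit(X, y)

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))
print("Combinations tried:", len(grid_result.cv_results_["params"]))
```

The same `param_grid` dictionary pattern works for a wrapped Keras model; only the estimator and the hyperparameter names change.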

One point to remember while performing grid search is that the more hyperparameters we have, the more time and memory the search needs, because the number of combinations is the product of the number of candidate values for each hyperparameter. This is where the curse of dimensionality comes into the picture: every dimension we add multiplies the number of models to train.

NOTE: Use grid search when you have a small number of dimensions; otherwise it will take a long time for execution to finish.
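A quick back-of-the-envelope calculation shows how fast the grid grows. The sketch below uses only the Python standard library; the candidate values and the helper name `count_fits` are hypothetical, chosen just to make the arithmetic visible.

```python
from itertools import product
from math import prod

# Hypothetical grid: 4 x 3 x 5 candidate values.
grid = {
    "hparams1": [2, 4, 8, 16],
    "hparams2": ["elu", "relu", "tanh"],
    "hparams3": [1, 2, 3, 4, 5],
}
cv_folds = 5

def count_fits(grid, cv_folds):
    """Return (number of combinations, number of model fits with CV)."""
    n_combinations = prod(len(values) for values in grid.values())
    return n_combinations, n_combinations * cv_folds

combos, fits = count_fits(grid, cv_folds)
print(combos, "combinations,", fits, "model fits")  # 60 combinations, 300 model fits

# Sanity check: enumerating the grid gives the same count.
assert len(list(product(*grid.values()))) == combos

# Adding one more hyperparameter with 6 values multiplies everything by 6.
bigger_grid = dict(grid, hparams4=[0.0, 0.1, 0.2, 0.3, 0.4, 0.5])
combos2, fits2 = count_fits(bigger_grid, cv_folds)
print(combos2, "combinations,", fits2, "model fits")  # 360 combinations, 1800 model fits
```

If each fit takes even a minute, the second grid already means more than a day of training, which is why grid search is best kept for small search spaces.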

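- Randomized Search: Another type of hyperparameter tuning is called random search. Instead of trying every combination, random search samples a fixed number of parameter settings at random from the ranges or distributions you specify. It is similar to grid search, but for the same compute budget it often finds settings that are as good or better, especially when the search space is large.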

```
# Example of Random Search (a template: names such as load_dataset, dvalue
# and hparams1..hparamsn are placeholders to replace with your own)
from scipy.stats import randint, uniform
from sklearn.model_selection import RandomizedSearchCV
# KerasClassifier lived in keras.wrappers.scikit_learn in older Keras releases;
# newer setups usually import it from the scikeras package instead.

# Load the dataset
X, Y = load_dataset()

# Create model for KerasClassifier
def create_model(hparams1=dvalue, hparams2=dvalue, ... hparamsn=dvalue):
    ...

model = KerasClassifier(build_fn=create_model)

# Specify parameters and distributions to sample from
hparams1 = randint(1, 100)
hparams2 = ['elu', 'relu', ...]
...
hparamsn = uniform(0, 1)

# Prepare the dict for the search
param_dist = dict(hparams1=hparams1,
                  hparams2=hparams2,
                  ...
                  hparamsn=hparamsn)

# Search in action!
n_iter_search = 16  # Number of parameter settings that are sampled.
random_search = RandomizedSearchCV(estimator=model,
                                   param_distributions=param_dist,
                                   n_iter=n_iter_search,
                                   n_jobs=...,   # number of parallel jobs
                                   cv=...,       # number of cross-validation folds
                                   verbose=...)  # how much progress output to print
random_search.fit(X, Y)

# Show the results
print("Best: %f using %s" % (random_search.best_score_, random_search.best_params_))
means = random_search.cv_results_['mean_test_score']
stds = random_search.cv_results_['std_test_score']
params = random_search.cv_results_['params']
for mean, stdev, param in zip(means, stds, params):
    print("%f (%f) with: %r" % (mean, stdev, param))
```
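As with grid search, the template above needs its placeholders filled in before it runs. Here is a minimal runnable sketch, assuming scikit-learn and SciPy are installed. The `RandomForestClassifier`, the iris data set, and the specific ranges are my own choices for illustration, not part of the template.

```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

X, y = load_iris(return_X_y=True)

# Distributions (or lists) to sample each hyperparameter from.
param_dist = {
    "n_estimators": randint(10, 100),  # integers from 10 to 99
    "max_depth": randint(1, 10),       # integers from 1 to 9
    "criterion": ["gini", "entropy"],
}

# Only n_iter settings are sampled, however large the search space is.
random_search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_distributions=param_dist,
    n_iter=8,
    cv=3,
    random_state=0,
)
random_search.fit(X, y)

print("Best: %f using %s" % (random_search.best_score_, random_search.best_params_))
print("Settings sampled:", len(random_search.cv_results_["params"]))
```

The cost here is fixed by `n_iter` times the number of folds (8 x 3 = 24 fits), no matter how many hyperparameters are added to `param_dist`.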

That's it for this article, guys. Do give it a like if you found it interesting, and motivate me to write more.